DNS, the DevOps way

Gareth Dwyer delivers entertaining talks one could describe as tech talk standup. I very much enjoy them. He’s a brilliant software engineer and reignited my respect for technical writing. At a recent DevOps Cape Town meetup he presented a simple set of tasks and their realisation, in the end sparking a discussion with the question: “Is this the DevOps way?”

As is typical for back-office applications, email plays a central role, which in turn means dealing with the dangerously unusable web UIs of rent-seeking local registrars. Uncertainty starts creeping in, along with the question of how to get some DevOps into the mix.

Instead of defining DevOps culture and practices, let me set out two personal objectives I’d see for this case:

- Transparency. Avoid not knowing who changed what when.

- Reliability. Automate tests and deployments.

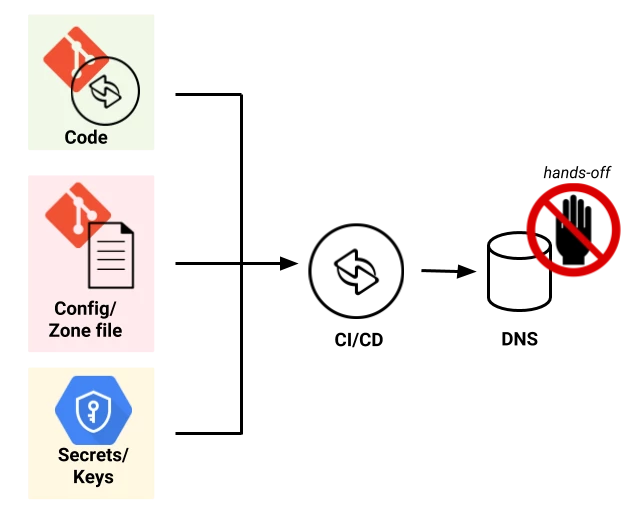

I’m a fan of “infrastructure as code”, where everything originates from a git repo and automation takes care of the deployment. On the code side you reap the benefits with regard to permissions, review, change log, and documentation. On the automation side you enjoy determinism and repeatability.

What could this look like for DNS?

In order to automate things you’ll need a DNS service with an API. Besides running your own BIND or djbdns, examples are Amazon Route 53, Cloudflare, Dyn … or GCP Cloud DNS. With all GCP services, including Cloud DNS, you get the holy trinity of API, command line, and web UI.

Note that “DNS” here does not mean “registrar”: you’d have your registrar point to the DNS service of your choice.

In terms of CI/CD tooling, everything is possible with GCP. I like GitLab and Travis very much, but tend to opt for Cloud Build, which naturally works well with GCP Cloud Source Repos, where we will store the zone file for this example.

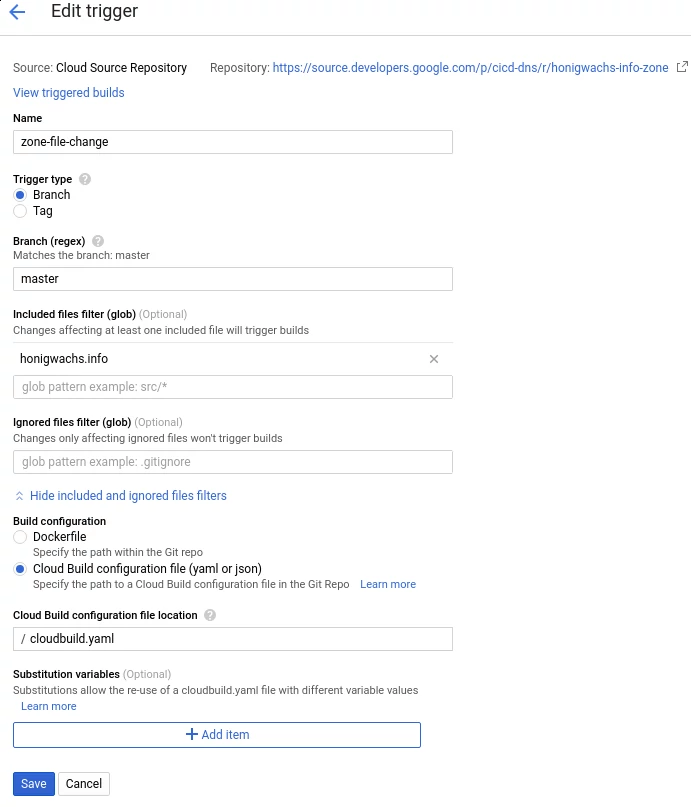

For the simplest implementation we assign the DNS Administrator role to the Cloud Build service account within the project. Then we define a trigger for any push of the zone file to the master branch of the repo as follows:

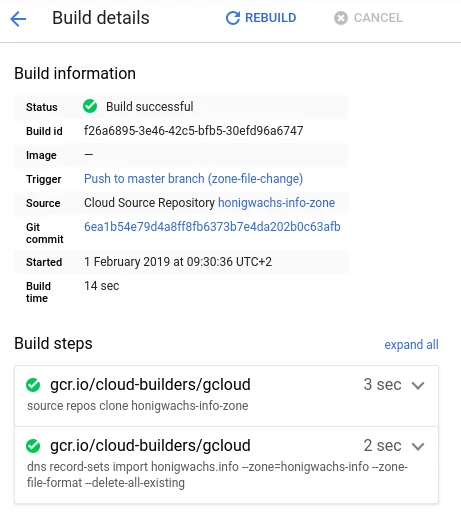

The cloudbuild.yaml referenced above looks as follows. Instead of assembling our own image we use the readily available gcloud cloud builder to clone the repo and import the zone file:

    steps:
    # Step 1: clone the repo that holds the zone file
    - name: gcr.io/cloud-builders/gcloud
      args: ['source', 'repos', 'clone', 'honigwachs-info-zone']
    # Step 2: import the BIND zone file into the managed zone
    - name: gcr.io/cloud-builders/gcloud
      args: ['dns', 'record-sets', 'import', 'honigwachs.info', '--zone=honigwachs-info', '--zone-file-format', '--delete-all-existing']

The gcloud dns record-sets import command is quite nifty: it validates your input file at execution time. Depending on your testing strategy, this might already be sufficient. With --zone-file-format we can use the BIND format instead of YAML and test zone files with tools from bind9utils such as named-checkzone. Do note that in this example the --delete-all-existing flag is set, so the import replaces the existing record sets with the contents of the file, SOA info included, which allows for a “pure” BIND experience when dealing with the zone file.
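
If you want a cheap test step before the import runs, a small pre-check can catch the most obvious mistakes. The following is only a minimal sketch under stated assumptions: the function name precheck_zone is hypothetical, it assumes every line is written out in full as "name ttl class type rdata" with fully qualified names (as in the zone file in this post), and its naive comment stripping would break on quoted semicolons in TXT records. It is not a substitute for named-checkzone or for the validation gcloud performs.

```python
import ipaddress


def precheck_zone(text, origin):
    """Return a list of problems found in a simple, fully written-out zone file.

    Hypothetical helper: assumes each line is 'name ttl class type rdata...'
    and that all names are fully qualified (ending in a dot).
    """
    problems = []
    soa_count = 0
    ns_count = 0
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split(";", 1)[0].strip()  # naive comment stripping
        if not line:
            continue
        fields = line.split()
        if len(fields) < 5:
            problems.append(f"line {lineno}: expected 'name ttl class type rdata'")
            continue
        name, ttl, rclass, rtype = fields[:4]
        rdata = fields[4:]
        if not name.endswith("."):
            problems.append(f"line {lineno}: name {name!r} is not fully qualified")
        elif name != origin and not name.endswith("." + origin):
            problems.append(f"line {lineno}: name {name!r} is outside {origin}")
        if not ttl.isdigit():
            problems.append(f"line {lineno}: TTL {ttl!r} is not a number")
        if rclass != "IN":
            problems.append(f"line {lineno}: unexpected class {rclass!r}")
        if rtype == "SOA" and name == origin:
            soa_count += 1
        elif rtype == "NS" and name == origin:
            ns_count += 1
        elif rtype == "A":
            try:
                ipaddress.IPv4Address(rdata[0])
            except ValueError:
                problems.append(f"line {lineno}: {rdata[0]!r} is not a valid IPv4 address")
    if soa_count != 1:
        problems.append(f"expected exactly one SOA record at {origin}, found {soa_count}")
    if ns_count == 0:
        problems.append(f"no NS records found at {origin}")
    return problems
```

Calling precheck_zone(open("honigwachs.info").read(), "honigwachs.info.") in a build step and failing the build on a non-empty result would be one way to wire this in; how strict the checks should be depends on your testing strategy.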

Cloud Build publishes update messages to a Cloud Pub/Sub topic called cloud-builds, which is automatically created when the Cloud Build API is enabled. From there, other actions such as notifications can be triggered. Overall, the deploy time is very low, and once the change is in GCP Cloud DNS we enjoy the astonishingly fast propagation we’re used to from Google ;-).
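
To give an idea of what such a notification hook could look like, here is a minimal sketch of a handler for messages from the cloud-builds topic. It assumes the message arrives as a dict with a base64-encoded "data" field containing the JSON representation of the build (the shape used by Pub/Sub-triggered functions and push subscriptions). The function name summarize_build_message and the wording of the notification are hypothetical, and the set of terminal statuses is an assumption that may need adjusting.

```python
import base64
import json

# Build statuses assumed to mean "the build has finished".
TERMINAL_STATUSES = {"SUCCESS", "FAILURE", "INTERNAL_ERROR", "TIMEOUT", "CANCELLED"}


def summarize_build_message(message):
    """Turn a message from the cloud-builds topic into a one-line notification.

    Returns None while the build is still queued or running, so that only
    finished builds produce a notification.
    """
    # The payload is base64-encoded JSON describing the build.
    build = json.loads(base64.b64decode(message["data"]).decode("utf-8"))
    status = build.get("status", "UNKNOWN")
    if status not in TERMINAL_STATUSES:
        return None
    return f"DNS deploy {build.get('id', '?')} finished with status {status}"
```

The returned string could then be handed to whatever channel you prefer (chat, email, ticket); that part is deliberately left out here.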

Oh, yes, this is what a BIND zone file looks like:

    $ cat honigwachs.info
    honigwachs.info. 21600 IN NS ns-cloud-b1.googledomains.com.
    honigwachs.info. 21600 IN NS ns-cloud-b2.googledomains.com.
    honigwachs.info. 21600 IN NS ns-cloud-b3.googledomains.com.
    honigwachs.info. 21600 IN NS ns-cloud-b4.googledomains.com.
    honigwachs.info. 21600 IN SOA ns-cloud-b1.googledomains.com. cloud-dns-hostmaster.google.com. 1 21600 3600 259200 300
    www.honigwachs.info. 300 IN A 151.101.1.195
    www.honigwachs.info. 300 IN A 151.101.65.195
    yetanother.honigwachs.info. 300 IN A 151.101.1.195
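
Cloud DNS thinks in record sets rather than individual lines: all records sharing a name and type form one set, which is why the two www A records above end up together. The sketch below illustrates that grouping. The function name group_record_sets is hypothetical, and it assumes the same fully written-out line layout as the file above; the "rrdatas" key simply mirrors the field name Cloud DNS uses for the values of a record set.

```python
def group_record_sets(text):
    """Group zone file lines into {(name, type): {"ttl": int, "rrdatas": [...]}}.

    Hypothetical helper: assumes each record line is 'name ttl class type rdata...'.
    """
    record_sets = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue  # skip blank or incomplete lines
        name, ttl, _rclass, rtype = fields[:4]
        rdata = " ".join(fields[4:])
        # The first record of a (name, type) pair creates the set; later ones are appended.
        entry = record_sets.setdefault((name, rtype), {"ttl": int(ttl), "rrdatas": []})
        entry["rrdatas"].append(rdata)
    return record_sets
```

Applied to the file above, the four NS lines collapse into one record set and the two www lines into another, which is also the granularity at which changes show up when reviewing a zone update.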

©2018 Google LLC, used with permission. Google and the Google logo are registered trademarks of Google LLC.